Modern center of excellence

Written by Jared Hillam

The quintessential application of cloud data technologies lies in their ability to transform raw data into actionable insights, a process integral to both artificial intelligence systems and human decision-making. However, as the cloud data industry matures, we are beginning to witness a period of architectural consolidation. While there remains some degree of rearrangement, the current phase largely represents the late adopters catching up. This transition signals a critical juncture for organizations: the need to shift focus toward stabilizing and optimizing the cost-efficiency of their cloud data operations. Analogous to how automobile manufacturers progress from crafting prototypes to establishing streamlined production models, organizations must navigate the evolution of their cloud data frameworks from experimental to operational stability. This progression is essential for harnessing the full potential of cloud data systems, ensuring they not only serve their primary purpose but do so in a manner that is both efficient and scalable.

This pivotal moment heralds the establishment of a Center of Excellence (CoE). The idea of a CoE is as venerable as the concept of scalability. It addresses a fundamental question: How can we devise a framework for individuals that not only maximizes the use of technology but also cultivates a culture of innovation and efficiency? A CoE serves as a beacon, guiding organizations through the complexities of technological adoption and operational optimization. It is about creating a symbiosis between human ingenuity and technological prowess, ensuring that the latter is leveraged to its fullest potential. This is not merely about adopting new tools or processes; it is about embedding these advancements into the very fabric of the organization's culture, promoting continuous improvement, knowledge sharing, and strategic foresight. In the context of cloud data management, a CoE becomes an indispensable conduit for translating technological capabilities into actionable business value, fostering an environment where data-driven decision-making is not just encouraged but becomes the norm.

To some, the concept of a Center of Excellence may evoke a sense of nostalgia, reminiscent of a bygone era where structured frameworks and processes ruled the corporate world. This perspective might stem from the belief that the advent of cloud computing, with its promise of limitless resources, rendered traditional organizational structures obsolete. There's a certain appeal to the idea that the boundless capacity of the cloud could liberate us from the constraints of old methodologies.

However, this view overlooks a crucial reality: despite the vast computational resources available in the cloud, companies that have not proactively managed these resources find themselves entangled in a web of escalating compute costs. This challenge is agnostic to the choice of cloud provider; the underlying issue of cost management persists across the board. The misconception that cloud computing inherently eliminates the need for organizational efficiency fails to account for the complexities of effectively leveraging these technologies.

Thus, for organizations that have heavily invested in cloud infrastructures, the establishment of a Center of Excellence is not a regression but a strategic imperative. It represents a commitment to refining and optimizing the use of cloud resources to ensure that investments are not only justified but are actively delivering maximum value. In this light, a Center of Excellence is a forward-looking strategy that enables organizations to harness the full potential of their cloud infrastructure, ensuring that they remain competitive in an increasingly data-driven landscape. It's about marrying the innovative possibilities of cloud computing with disciplined, strategic management to achieve sustainable growth and efficiency.

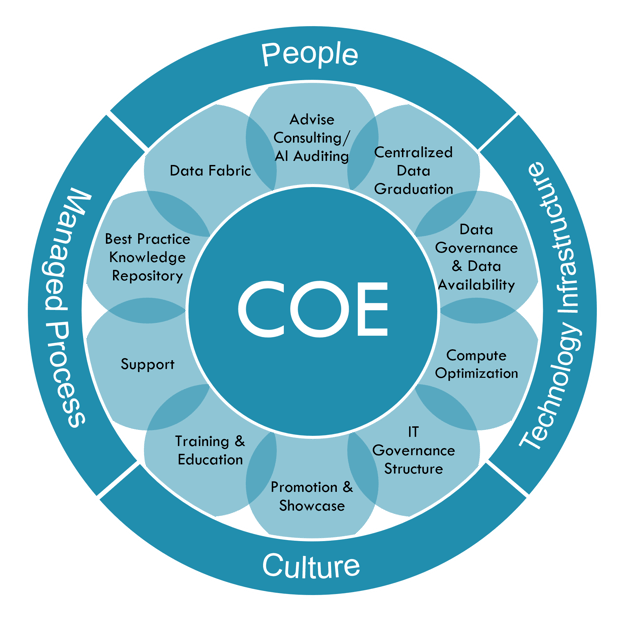

In the current landscape, a Center of Excellence (CoE) is pivotal for steering organizational strategy and operational efficiency, especially in harnessing the transformative power of cloud data management. It acts as the cornerstone for integrating technology with business processes, driving innovation, and fostering a culture of continuous improvement.

As we delve into the modern pillars that define today's CoE, it's essential to understand how these foundational elements interplay to create a resilient and forward-thinking entity within the organization. These pillars are not just isolated tenets but are interconnected, reinforcing the CoE's role in maximizing the potential of cloud data systems and ensuring that technology investments align with business objectives. Let's explore just 6 of these pillars, highlighting their significance and positioning within the broader context of the CoE's mission.

Access Control

Access Control Automation is a cornerstone of a modern Center of Excellence and is central to steering the organization toward its desired outcomes. This pillar is foundational because it directly tackles the challenges posed by the vast, ostensibly boundless nature of cloud data systems. By dynamically integrating access control with application processes such as hiring, firing, promotion, and demotion, organizations can achieve a dual objective: mitigating behavioral issues and curbing unnecessary computational waste.

The rationale behind prioritizing Access Control Automation lies in its ability to enforce a disciplined, secure, and efficient operational environment. It ensures that only authorized personnel have access to specific data sets and computational resources, aligning access privileges with the current organizational role and status of the employee. This dynamic approach to access control not only enhances security by preventing unauthorized data access but also optimizes resource allocation, ensuring that computational power is utilized by those who genuinely need it, in accordance with their current responsibilities and projects.
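
To make the idea of tying access to HR events concrete, here is a minimal Python sketch, assuming a hypothetical mapping from organizational roles to platform grants. The role names, grant names, `Employee` class, and `apply_hr_event` function are all illustrative and not part of any vendor's API. The function computes the grants to add and to revoke so that access always mirrors the employee's current role; a real deployment would hand that difference to whatever provisioning mechanism the platform provides.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Hypothetical policy: organizational role -> platform grants it should hold.
# Role and grant names are illustrative, not tied to any vendor.
ROLE_GRANTS: Dict[str, Set[str]] = {
    "analyst": {"READ_CURATED"},
    "senior_analyst": {"READ_CURATED", "READ_STAGED"},
    "data_engineer": {"READ_RAW", "READ_STAGED", "READ_CURATED", "WRITE_STAGED"},
}


@dataclass
class Employee:
    employee_id: str
    role: Optional[str] = None
    grants: Set[str] = field(default_factory=set)


def apply_hr_event(
    emp: Employee, event: str, new_role: Optional[str] = None
) -> Tuple[Set[str], Set[str]]:
    """Reconcile an employee's grants with an HR event.

    Returns (to_grant, to_revoke) so a provisioning job applies only the
    difference between current and target access.
    """
    if event == "terminate":
        target: Set[str] = set()
        emp.role = None
    elif event in ("hire", "promote", "demote"):
        if new_role not in ROLE_GRANTS:
            raise ValueError(f"unknown role: {new_role!r}")
        target = set(ROLE_GRANTS[new_role])  # copy so the policy is never mutated
        emp.role = new_role
    else:
        raise ValueError(f"unsupported HR event: {event!r}")

    to_grant = target - emp.grants
    to_revoke = emp.grants - target
    emp.grants = target  # grants now mirror the current role exactly
    return to_grant, to_revoke
```

Calling `apply_hr_event(emp, "hire", "analyst")` and later `apply_hr_event(emp, "terminate")` would first grant `READ_CURATED` and then revoke it, leaving no orphaned access behind. Returning a diff rather than a full grant list keeps each provisioning run small and easy to audit.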

Implementing Access Control Automation as a fundamental pillar of a Center of Excellence translates into a more streamlined, secure, and cost-effective cloud data management strategy. It serves as a proactive measure to manage the inherent complexities and potentials of cloud systems, demonstrating a strategic alignment of technological capabilities with organizational governance and operational efficiency. This, in turn, fosters a culture where technology empowers organizational agility and growth, rather than becoming a source of unbridled consumption and potential vulnerability.

Data Graduation

Data Graduation stands as another fundamental pillar, emphasizing the strategic handling of data from its inception to its ultimate use. This concept involves categorizing data into various levels of refinement—from raw, unprocessed data to highly aggregated and analyzed information. By integrating Data Graduation with Access Control, organizations can ensure that computational resources are meticulously allocated, matching the complexity and sensitivity of data with the appropriate level of access. This alignment prevents the squandering of valuable compute resources on queries or access levels that do not correspond with the data's stage of readiness or the user's need for information. It's a methodology that not only enhances efficiency but also supports a culture of responsible data management, ensuring that every query and access request is both justified and optimized for the data's current state. This pillar reinforces the CoE's role in establishing a disciplined yet flexible framework for data access and utilization, ensuring that data serves its highest purpose at every stage of its lifecycle.
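
The pairing of Data Graduation with Access Control can be sketched as a simple policy check. In this minimal Python sketch, the four tiers, the dataset names in `CATALOG`, and the per-role floors in `LOWEST_TIER_ALLOWED` are hypothetical; each organization would define its own levels of refinement. A role may query a dataset only if that dataset is at least as refined as the role's floor, and anything unknown is denied by default.

```python
from enum import IntEnum
from typing import Dict


class Tier(IntEnum):
    """Levels of refinement; a higher value means more processed data."""
    RAW = 0
    STAGED = 1
    CURATED = 2
    AGGREGATED = 3


# Hypothetical catalog: dataset name -> graduation tier.
CATALOG: Dict[str, Tier] = {
    "landing.clickstream_events": Tier.RAW,
    "staging.orders_cleaned": Tier.STAGED,
    "core.customer_orders": Tier.CURATED,
    "marts.monthly_revenue": Tier.AGGREGATED,
}

# Hypothetical policy: the least-refined tier each role may query.
LOWEST_TIER_ALLOWED: Dict[str, Tier] = {
    "business_user": Tier.AGGREGATED,
    "analyst": Tier.CURATED,
    "data_scientist": Tier.STAGED,
    "data_engineer": Tier.RAW,
}


def can_query(role: str, dataset: str) -> bool:
    """Allow a query only if the dataset is at least as refined as the role's floor."""
    tier = CATALOG.get(dataset)
    floor = LOWEST_TIER_ALLOWED.get(role)
    if tier is None or floor is None:
        return False  # deny by default for unknown datasets or roles
    return tier >= floor
```

Under this assumed policy, a business user is steered toward aggregated marts rather than scanning raw event tables, which is where the compute savings described above would come from.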

Compute Optimization

Compute Optimization emerges as a pivotal pillar within a Center of Excellence focused on cloud data management, challenging the oft-held assumption that cloud data platforms inherently ensure optimal computational efficiency. Despite the advanced capabilities and promises of automatic optimization touted by cloud data services, empirical evidence suggests that significant performance enhancements—sometimes exceeding 1000%—can be achieved through meticulous refinement of query strategies. This realization underscores the necessity for organizations to adopt a proactive, strategic approach to managing their computational resources.

The essence of Compute Optimization lies in the establishment of an internal practice dedicated to identifying and restructuring the most inefficient and resource-intensive queries. By targeting these "egregiously egregious" queries, organizations can unlock dramatic improvements in performance and efficiency. This process is not merely a technical exercise but a strategic investment that yields substantial, measurable returns, often far outweighing the operational costs of maintaining such a specialized team.
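
Finding the "egregiously egregious" queries usually starts with ranking query patterns by total cost. The following Python sketch assumes an export of the platform's query history reduced to two hypothetical fields, the SQL text and a `compute_seconds` cost figure; the `fingerprint` normalization (replacing literals with `?`) is a deliberately crude heuristic, not a full SQL parser. It groups runs that differ only in literal values and returns the most expensive patterns first.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class QueryRecord:
    sql: str
    compute_seconds: float  # stand-in for whatever cost metric the platform reports


def fingerprint(sql: str) -> str:
    """Normalize a query so runs that differ only in literals group together."""
    s = sql.lower()
    s = re.sub(r"'(?:[^']|'')*'", "?", s)     # string literals -> ?
    s = re.sub(r"\b\d+(?:\.\d+)?\b", "?", s)  # numeric literals -> ?
    return re.sub(r"\s+", " ", s).strip()     # collapse whitespace


def top_offenders(
    history: List[QueryRecord], n: int = 10
) -> List[Tuple[str, float, int]]:
    """Return the n query patterns with the highest total compute, most expensive first."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for rec in history:
        fp = fingerprint(rec.sql)
        totals[fp] += rec.compute_seconds
        counts[fp] += 1
    ranked = sorted(totals, key=totals.__getitem__, reverse=True)
    return [(fp, totals[fp], counts[fp]) for fp in ranked[:n]]
```

Ranking by total rather than per-run cost surfaces both the single runaway query and the cheap query that runs thousands of times, either of which can be the better rewrite target.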

This pillar highlights the importance of a deliberate, hands-on approach to leveraging cloud computing resources, moving beyond reliance on automated optimizations provided by cloud services. It calls for a culture of continual improvement and optimization, where the ongoing assessment and enhancement of computational practices are ingrained in the organizational ethos. Through Compute Optimization, a Center of Excellence ensures that cloud data management is not just about maintaining operations but about elevating them to new heights of efficiency and effectiveness, demonstrating a clear and direct impact on the organization's bottom line.

AI Auditing

AI Auditing stands as a crucial pillar within the framework of a Center of Excellence for cloud data management, embodying a bidirectional approach to ensuring code quality and efficacy. This principle extends beyond traditional auditing methodologies by incorporating both artificial intelligence and human oversight into the review process. For instance, systems like ChatGPT, which can generate code compatible with platforms such as Snowflake and Databricks, exemplify the intersection where AI's capabilities meet human expertise. This dual-layered auditing process not only aims to leverage AI for enhancing code efficiency and innovation but also underscores the indispensable role of human expertise in overseeing and refining AI-generated outputs.

The rationale behind AI Auditing is two-fold. On one hand, it allows for the harnessing of AI's potential to identify inefficiencies, suggest optimizations, and even preemptively flag potential errors within human-written code, thereby opening avenues for enhanced performance and innovative problem-solving strategies. On the other hand, it necessitates a deep, nuanced understanding from human operators, who must critically evaluate AI-generated code. This critical oversight is essential, as AI, despite its advanced capabilities, may not fully grasp the complex, contextual considerations of each unique application. The sophistication of AI outputs, which can impress laypersons with their technical articulation, further amplifies the need for knowledgeable human intervention to discern optimal from suboptimal solutions.

AI Auditing, therefore, is not just about implementing an additional layer of quality control; it's about fostering a symbiotic relationship between human intelligence and artificial intelligence. This approach not only mitigates risks and averts costly errors but also propels organizations towards adopting more advanced, efficient, and innovative coding practices. By embedding AI Auditing into the core operations of a Center of Excellence, organizations can ensure that their use of technology is both cutting-edge and grounded in solid, expert understanding, thus maximizing the benefits of their cloud data management initiatives.

Organizational Structure

The question of data ownership within an organization is a nuanced and often contentious issue, serving as a critical pillar in the establishment of a Cloud Data Management Center of Excellence. The delineation of ownership—whether data is considered an asset under the purview of IT departments or as integral to business operations—has profound implications on the perception, utilization, and governance of data across the organization. This pillar emphasizes the need for a clear, strategic approach to data stewardship, one that transcends traditional departmental silos and fosters a unified perspective on data as a valuable enterprise resource.

The resolution to the ownership question significantly influences the design and reception of data management solutions. When data is viewed through the lens of IT, the focus may lean more toward technical aspects such as security, storage, and accessibility. Conversely, if data is regarded as a business asset, the emphasis shifts towards data analytics, insights generation, and strategic decision-making. The optimal approach advocates for a collaborative framework where IT and business units coalesce around a shared vision, recognizing the multifaceted value of data.

By establishing a governance model that reflects a balanced partnership between IT and business stakeholders, organizations can ensure that data management strategies are both technically sound and aligned with business objectives. This collaborative structure not only mitigates conflicts over data ownership but also enhances the organization’s agility, enabling a more dynamic and effective use of data to drive innovation and achieve competitive advantage.

Training & Education

The Training & Education pillar is frequently underestimated by stakeholders who assume its importance is a given. However, the reality is far more nuanced and critical, especially in the context of cloud data management. The direct correlation between users' proficiency in crafting queries and the organization's compute consumption (and, by extension, its cost structure) cannot be overstated. Within the intricate landscape of cloud data ecosystems, populated by an unbounded array of data objects and users, comprehensive user training becomes just as critical as managing the data objects themselves. This focus on users is key to avoiding the misuse of compute resources.

This pillar underscores the vital need for a strategic approach to training that goes beyond the basics, addressing the intricacies of query optimization and efficient data use within the user's purview. Without this focus, organizations risk falling into a cycle of reactive management, contending with compute overruns as a consequence of untrained or undertrained users operating in an environment where computational resources seem boundless. The shift from the constrained computing capacities of traditional Massively Parallel Processing (MPP) databases to the virtually limitless environments of today's cloud platforms has magnified this risk.

The solution does not lie solely in programmatic guardrails, which, while useful, address only the symptoms of the underlying issue. Ideally, teams should receive tailored training that speaks to the assets in their own ecosystem. Intricity often delivers this through dynamic templates.

Standardizing for Scale

We have moved beyond the initial phase of rapid adoption and integration, commonly characterized by a "rip and replace" mentality. Today, the focus has transitioned towards standardization and optimization. This evolution underscores the importance of refining existing cloud environments to enhance efficiency, reduce costs, and maximize the value derived from cloud investments. As organizations navigate this mature phase of cloud integration, the principles of standardization and continuous optimization become critical for sustaining growth, competitiveness, and innovation in a cloud-centric world.

Who is Intricity?

Intricity is a specialized selection of over 100 Data Management Professionals, with offices located across the USA and headquarters in New York City. Our team of experts has implemented solutions in a variety of industries, including Healthcare, Insurance, Manufacturing, Financial Services, Media, Pharmaceutical, Retail, and others. Intricity is uniquely positioned as a partner to the business that deeply understands what makes the data tick. This joint knowledge and acumen has positioned Intricity to beat out its Big 4 competitors time and time again. Intricity’s area of expertise spans the entirety of the information lifecycle. This means that when your problem involves data, Intricity will be a trusted partner. Intricity's services cover a broad range of data-to-information engineering needs:

What Makes Intricity Different?

While Intricity conducts highly intricate and complex data management projects, Intricity is first and foremost a Business User Centric consulting company. Our internal slogan is to Simplify Complexity. This means that we take complex data management challenges and not only make them understandable to the business but also make them easier to operate. Intricity does this by using tools and techniques that are familiar to business people but adapted for IT content.

Thought Leadership

Intricity authors a highly sought-after Data Management Video Series targeted towards Business Stakeholders at https://www.intricity.com/videos. These videos are used in universities across the world. Here is a small set of universities leveraging Intricity’s videos as a teaching tool:

Talk With a Specialist

If you would like to talk with an Intricity Specialist about your particular scenario, don’t hesitate to reach out to us. You can write us an email: specialist@intricity.com

(C) 2023 by Intricity, LLC

This content is the sole property of Intricity LLC. No reproduction can be made without Intricity's explicit consent.

Intricity, LLC. 244 Fifth Avenue Suite 2026 New York, NY 10001

Phone: 212.461.1100 • Fax: 212.461.1110 • Website: www.intricity.com
