“Primum non nocere” (latin for First Do No Harm) is part of the Hippocratic Oath dictated by every doctor, but perhaps it should be an oath given by IT professionals. This is particularly the case when it comes to data management.

Your data management systems represent your organization more than any other kind of system. They carry your company’s combined logic for how it interprets the ocean of data. That technical and business logic is gold, and isn’t readily replaceable. If you’ve built your data management layers correctly their logic should outlast your ERP’s, CRM’s and any other applications that come and go. But that doesn’t mean the systems that house this logic never get an upgrade. Keeping up to date with modern technology helps your organization make the most of its precious data assets. But how do you upgrade a Data Warehouse while people are leveraging it daily for reports and analytics? How do you ensure that you “Do No Harm”?

Replicate

Data Replication

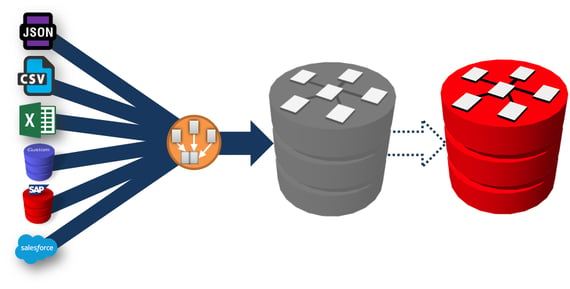

Replication is a technology which aims to mimic source data (and structure) to a target database. Thus imagine you have data in Salesforce.com and you use a replication solution to clone that data into SQL Server. The SQL Server instance would then be a 1 to 1 mirror of the dataset in salesforce.com. If there was a table change or newly added data, the replication tool will control the SQL Server instance to ensure it is synced up with all those changes. Replication solutions have come a long way in the last 10 years, and today they are available as cloud based services which greatly simplify the steps involved.

Replicating the Data Warehouse

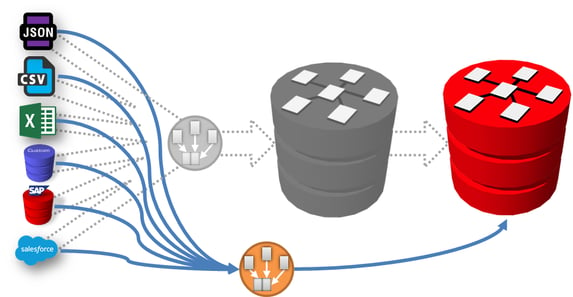

By replicating the legacy Data Warehouse onto a modern Data Warehousing platform, we can quickly adopt the new platform as if it were in full production. Meaning, we can route the existing BI solutions to the new Data Warehouse, and since all the schemas and data sets are the same as the old Data Warehouse, the change is transparent to the Analytics and Reporting user communities.

Now this does mean that we still have the old Data Warehouse in production, but it also means that we now have bought ourselves time to reroute all the logic so it points directly to the new data warehousing platform. Also the old Data Warehouse won’t likely need the hardware capacity it required in the past as it isn’t being hit up with daily queries.

Level of Effort

With the pressure released to make the migration occur, the next question is how difficult will the migration be? There are a number of factors that play into predicting the level of effort.

- Use of Database Specific Functions: Some MPP vendors have packaged analytic solutions. In these cases there is effort in unwinding those custom analytic functions to determine the outputs and represent them in SQL.

- Use of ETL Tool: If the tools being leveraged support both Data Warehousing solutions then, potentially, we can simply make a target database change.

- Use of Pushdown Optimization (ELT): Many data integration solutions leverage ELT as a data transformation method. This means that the modifications to the data are coupled to the target Data Warehouse. This doesn’t necessarily hint that it will extend the level of effort. But it does place great reliance on the integration vendor’s pushdown optimization capabilities and the legacy database. Thus it means we need to spend time ensuring there are no discrepancies between databases.

- Modeling: If the data is not being transformed to conform to a model, then in essence you don’t have a Data Warehouse. Thus in this case what you likely have is a series of replicated tables into what would be more aptly called a Data Lake. Data Lakes are fairly straight forward to migrate as we are just changing the replication destination and not dealing with any transformations. If the Data Lake is being queried via a series of views then there will be work in ensuring the views can be migrated.

Planning it Out

To kick off these migrations Intricity conducts a strategy engagement to map out the most effective migration path based on the existing landscape. The engagement includes a few critical components:

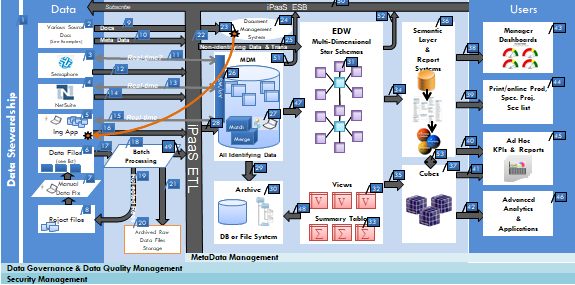

Reference Migration Architecture

Intricity draws out the stages of the phased plan to migrate the Data Warehouse, this acts as a blueprint for the effort, and includes the replication steps as well as the transitioning steps to completing the migration. Additionally, this planning step is often a good time to evaluate the other adjoining components to the Data Warehousing environment. Intricity draws out these components into the Reference Architecture.

Phased Migration Roadmap

Using the assets from the Reference Migration Architecture, Intricity outlines the phases the organization needs to plan for. This includes the replication and a detailed breakdown of the approach being taken to convert the existing integration path to the new Data Warehouse. Each of these phases is priced so that the organization can effectively budget the migration.

Who is Intricity?

Intricity is a specialized selection of over 100 Data Management Professionals, with offices located across the USA and Headquarters in New York City. Our team of experts has implemented in a variety of Industries including, Healthcare, Insurance, Manufacturing, Financial Services, Media, Pharmaceutical, Retail, and others. Intricity is uniquely positioned as a partner to the business that deeply understands what makes the data tick. This joint knowledge and acumen has positioned Intricity to beat out its Big 4 competitors time and time again. Intricity’s area of expertise spans the entirety of the information lifecycle. This means when you’re problem involves data; Intricity will be a trusted partner. Intricity's services cover a broad range of data-to-information engineering needs:

What Makes Intricity Different?

While Intricity conducts highly intricate and complex data management projects, Intricity is first a foremost a Business User Centric consulting company. Our internal slogan is to Simplify Complexity. This means that we take complex data management challenges and not only make them understandable to the business but also make them easier to operate. Intricity does this through using tools and techniques that are familiar to business people but adapted for IT content.

Thought Leadership

Intricity authors a highly sought after Data Management Video Series targeted towards Business Stakeholders at https://www.intricity.com/videos. These videos are used in universities across the world. Here is a small set of universities leveraging Intricity’s videos as a teaching tool:

Talk With a Specialist

If you would like to talk with an Intricity Specialist about your particular scenario, don’t hesitate to reach out to us. You can write us an email: specialist@intricity.com

(C) 2023 by Intricity, LLC

This content is the sole property of Intricity LLC. No reproduction can be made without Intricity's explicit consent.

Intricity, LLC. 244 Fifth Avenue Suite 2026 New York, NY 10001

Phone: 212.461.1100 • Fax: 212.461.1110 • Website: www.intricity.com