You’re probably familiar with the concept of a Noun and a Verb. A noun represents a person, place, or thing. A verb represents the action that a person, place, or thing participates in. In many languages, this concept is introduced in grade school.

If you can make this distinction, then you have some of the fundamental tools to describe Master Data and Transaction Data. Master Data represents the people, places, or things that an organization needs to manage, whereas Transaction Data represents the events that your organization is engaged in. The problems presented by Master Data vs Transaction Data are really what Master Data Management and Data Warehousing are all about.

First, let's talk about some of the common problems presented by both Master Data and Transaction Data.

Master Data

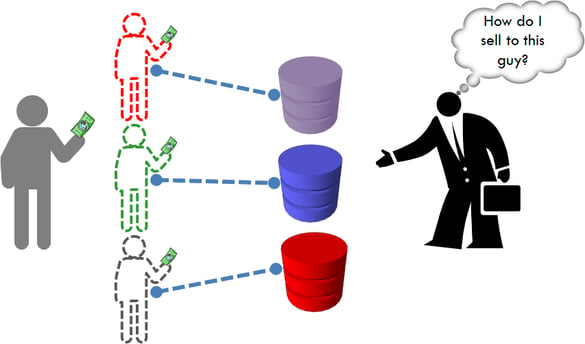

I'm going to tell you a story about a start-up that turns into a large multinational company. When they first get started they have a single customer tracking system. Over time, they begin to add a few more systems. First, they add a CRM, then they add a Support Management system, then their first ERP, then a Marketing Automation system, and a host of custom web applications.

Imagine I'm a customer of this startup in its early years. If the startup wanted to find me they could easily search for my phone number or name, and a unique record of me would appear from the one system that housed my information. But let's rerun this experiment a few years later with the now multinational organization. Where does my record exist? If I've continued to do business with this company then my records would be in every one of the systems that they had adopted over the years. Now, it's wishful thinking to assume that my contact information was entered into each new system consistently. More realistically, my customer information would have slight variations from system to system. Sometimes my info might be spelled wrong or be wrong altogether.

This turns out to be one of the major problems with Master Data. The fluidity of customer engagement and the updating of records is a constant event within organizations. However, these changes in customer records happen within the silos of each application system. The larger a company gets, the more dispersed customer records become. This dilution of the customer identity becomes a growing barrier the larger the organization gets. Imagine trying to have a relationship with a customer whose identity you couldn’t really rely on.
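To make the drift concrete, here is a minimal sketch in Python. The three records, the system names, and the `normalize_phone` helper are all made up for illustration; they are not taken from any real system. The sketch only shows that a naive exact-match search sees three different customers, while even a light cleanup of one field reveals a single person.

```python
import re

# Hypothetical records for one customer as they might appear in three systems.
records = [
    {"system": "CRM", "name": "Jon Smith", "phone": "(212) 555-0147"},
    {"system": "Support", "name": "John Smith", "phone": "212.555.0147"},
    {"system": "ERP", "name": "SMITH, JOHN", "phone": "+1 212 555 0147"},
]


def normalize_phone(raw: str) -> str:
    """Keep digits only and drop a leading US country code (an assumed rule)."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


# Exact matching on the raw values treats every system's record as a new person.
distinct_raw_phones = len({r["phone"] for r in records})
distinct_raw_names = len({r["name"] for r in records})

# After normalizing the phone number, the three records collapse into one.
distinct_normalized_phones = len({normalize_phone(r["phone"]) for r in records})
```

Phone numbers are the easy case. Names, addresses, and records that are simply wrong need fuzzier matching and human judgment, which is where the real effort goes.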

I’ve focused on the customer problem, but Master Data covers a wide array of nouns that an organization wants to keep consistent, such as Products and Assets. Additionally, there is reference data: data assets that are inherited (usually from outside the organization) but that the organization still needs to maintain and keep consistent. Examples are locations (like zip codes), external corporation names, and even calendars. Over the years, more and more data has been moving into the reference data category. For example, in the early years of maintaining master data, organizations often made significant efforts to standardize corporation names within their systems. Today, however, those names are supplied by external data brokerage companies that specialize in such standardization. The same is true for records of individuals. While the organization still needs to ensure that such records are maintained, the golden version of that customer record is maintained by external sources, then simply enriched by the existing data systems within the organization.

Resolving Identity

In theory, organizations could fix their Master Data Management problems by becoming militant about processes. If every entry point at every data system was consistently designed, handled, defined, and deployed, MDM wouldn’t even be an industry. No such organization exists. Not even close. However, that’s not to say that process can’t play a big role in fixing major portions of the identity problem. For example, if an organization simply agrees on what a customer actually represents (yes, even this is a challenge for many organizations), it can begin to get a lot more consistent about how it handles records. Most data governance efforts start with this type of non-mechanized intervention. However, at some point, the desired changes require some tooling to actually set corrected data practices in motion. This is where Master Data Management comes into the picture.

Master Data Management

MDM delivers a database that is designed to store the unique nouns that are important to an organization. On top of that database is an automation framework that defines how those unique records are to be updated. Connected to that automation framework are all the data systems that applications update on a daily basis. When a customer record is updated, the MDM system evaluates the update in relation to the existing customer records to determine whether the update should be made to the “golden record”.
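The evaluation step described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not how any particular MDM product works: the `GoldenRecord` fields, the match rule (same email or a similar name), the 0.85 threshold, and the "newest update wins" survivorship rule are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, replace
from difflib import SequenceMatcher


@dataclass(frozen=True)
class GoldenRecord:
    customer_id: int
    name: str
    email: str
    updated: str  # ISO date (YYYY-MM-DD) of the last accepted update


def name_similarity(a: str, b: str) -> float:
    """Case-insensitive similarity between two names, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def evaluate_update(golden, update, threshold=0.85):
    """Decide what to do with an incoming update from a source system.

    Returns a (decision, record) pair where decision is one of:
      "merged"  - matched a golden record and the update is newer
      "ignored" - matched a golden record but the update is older
      "review"  - no confident match; route to a data steward
    """
    for record in golden:
        same_email = record.email.lower() == update["email"].lower()
        similar_name = name_similarity(record.name, update["name"]) >= threshold
        if same_email or similar_name:
            # ISO dates in the same format compare correctly as strings.
            if update["updated"] > record.updated:
                merged = replace(
                    record,
                    name=update["name"],
                    email=update["email"],
                    updated=update["updated"],
                )
                return "merged", merged
            return "ignored", record
    return "review", None


golden = [GoldenRecord(1, "John Smith", "john@example.com", "2020-01-01")]
incoming = {"name": "Jon Smith", "email": "John@Example.com", "updated": "2023-05-01"}
decision, record = evaluate_update(golden, incoming)
```

Every rule in that function is a business decision somebody has to make and defend, which is why the logic behind the automation tends to be the expensive part.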

Don’t get starry-eyed about my use of the word “automation”. There is a ton of work around building the commensurate logic that drives that automation. Not to mention the ongoing political battles to design it and keep it maintained.

Challenges

One of the biggest challenges organizations face in deploying MDM is the perception of the value of the solution. Inconsistency problems in data are something that decision-makers are often unaware of. On top of that, corporate leaders have a hard time envisioning things being much different with an MDM solution in place. Often it takes a train wreck to get the attention of stakeholders to launch an MDM project. For this reason, MDM solutions should be approached with very clear value statements (preferably with limited scope) that the organization expects to achieve.

Transaction Data

Transaction data is the constant stream of events and interactions in which an organization is engaged (the verbs). Transaction data is said to be “highly volatile” data as it’s difficult to predict what mix of verbs will make up the transaction. These could be online purchases as well as brick-and-mortar ones. Each purchase might use a different credit card and have different items.

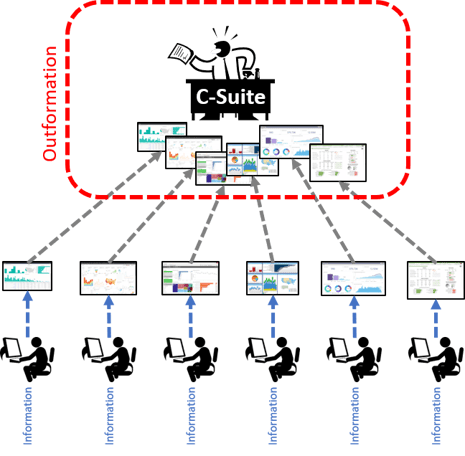

To really interpret these events you need to get up close and personal to determine what is going on and how it impacts the business. This is simple if you only have one event, but in a large organization, you have many events interacting with each other to create desired outcomes. This makes the process of turning data into information a much more complex endeavor. When you break up the word in-formation it tells you a lot about what information is. Data isn’t information because it hasn’t yet been formed into an insight or something you can take action on.

The gap between data and information can only be filled by producing the query logic necessary to ultimately form the nouns and verbs necessary to produce insights. That logic MUST live somewhere. Commonly this logic lives in the heads of independent data analysts. As organizations scale, the dispersion of that logic living in people’s heads begins to be a problem. One person's formation of data may differ from another person’s formation of data. So what we begin to see is “out” formation, or too many versions of the truth. This might not be visible to the individual data analyst and their direct manager. However, the further up the chain an analyst's information moves, the further out of formation it falls relative to other analyses.
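Here is a small sketch of how two reasonable people end up with two versions of the truth. The order data and both revenue definitions are invented for illustration; the point is only that each analyst's logic is defensible on its own and the answers still disagree.

```python
# Hypothetical order events pulled from a transaction system.
orders = [
    {"id": 1, "amount": 100.0, "status": "completed"},
    {"id": 2, "amount": 40.0, "status": "refunded"},
    {"id": 3, "amount": 60.0, "status": "completed"},
    {"id": 4, "amount": 25.0, "status": "pending"},
]


def revenue_analyst_a(orders):
    """Analyst A's definition: revenue is every order that was placed."""
    return sum(o["amount"] for o in orders)


def revenue_analyst_b(orders):
    """Analyst B's definition: revenue is only orders that completed."""
    return sum(o["amount"] for o in orders if o["status"] == "completed")


revenue_a = revenue_analyst_a(orders)  # 225.0
revenue_b = revenue_analyst_b(orders)  # 160.0
```

Neither function is wrong. The problem is that the definition of revenue lives in two separate heads instead of in one shared place.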

Putting the Data In-Formation

Data Warehousing

The Data Warehouse is a database that is modeled to relate the nouns and verbs to each other in a way that puts the data in-formation consistently. Thus, no matter who or what queries the data warehouse, they will get consistent results. This formation gives organizations “superorganism”-like abilities, because decisions about collective behaviors can be made and changed.
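As a rough sketch of what relating nouns to verbs can look like, the example below uses Python's built-in `sqlite3` module with one noun table and one verb table. The table names (`dim_customer`, `fact_purchase`), the columns, and the sample rows are assumptions made for illustration, and a real warehouse model would be far richer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- The noun: one row per unique customer.
    CREATE TABLE dim_customer (
        customer_key  INTEGER PRIMARY KEY,
        customer_name TEXT NOT NULL
    );

    -- The verb: one row per purchase event, pointing back at the noun.
    CREATE TABLE fact_purchase (
        purchase_id  INTEGER PRIMARY KEY,
        customer_key INTEGER NOT NULL REFERENCES dim_customer (customer_key),
        amount       REAL NOT NULL
    );

    INSERT INTO dim_customer VALUES (1, 'John Smith'), (2, 'Maria Garcia');
    INSERT INTO fact_purchase VALUES (1, 1, 100.0), (2, 1, 60.0), (3, 2, 25.0);
    """
)


def sales_by_customer(connection):
    """The shared query logic: total purchase amount per customer."""
    return connection.execute(
        """
        SELECT c.customer_name, SUM(f.amount)
        FROM fact_purchase AS f
        JOIN dim_customer AS c ON c.customer_key = f.customer_key
        GROUP BY c.customer_name
        ORDER BY c.customer_name
        """
    ).fetchall()


totals = sales_by_customer(conn)
```

Because the relationship between customer and purchase is defined once in the model, anyone who asks for sales by customer is working from the same formation.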

There are two ways of attacking the formation challenge in a Data Warehouse: bottom-up or top-down. The bottom-up approach focuses on the data sources and what they provide, then derives information assets from those sources. This method provides more project autonomy (less business involvement) but introduces huge user adoption risks. Not only are the requirements not explicitly identified, but because the business stakeholders weren’t involved, they often won’t use the result.

The top-down approach focuses on the stakeholders and derives the model and logic in the Data Warehouse from their requirements. The advantage of the top-down approach is greater user adoption of the solution. This obviously means that there is a spotlight on the project at all times. Some IT organizations would prefer to avoid this attention. Top-down is typically Intricity’s preferred approach because it gets to the central in-formation need by focusing on the beneficiaries.

Challenges

One of the biggest challenges that organizations face in driving adoption of a Data Warehouse is the perception that buying a Business Intelligence (BI) tool would solve the need. If you compare the process of building your information backbone to building a house, then data integration and data management would represent the foundation, framing, plumbing, electrical, AC, and roofing. The BI would be the interior design and furnishings. When people come to your home you rarely get compliments on how good your plumbing is. But if your plumbing is bad, your elaborate decorations would come coupled with a smelly motif.

Business stakeholders, however, are often unaware of this, and they end up building the décor (Business Intelligence deployments) before the foundation (Data Warehouse) of the house is even in place.

Complementary Solutions

While the title of this white paper is DW vs MDM, these two solutions are in no way in conflict with each other. Having MDM in place greatly enhances a Data Warehousing deployment, as it ensures consistent nouns to which we can relate the verbs. Neither of these solutions is cheap. Both should be considered “programs” and not “projects.”

Often organizations will hobble along without one or the other. In either case, however, the results are sub-optimal. The key to moving these projects forward is timing, and getting the right sequence of wins at the right time. Often there are intermediary steps that will still provide value both now and when the end solution architecture is in place. Intricity can help chart the right path forward, establishing a project sequence that delivers value along the way and keeps the project phases focused.

Who is Intricity?

Intricity is a specialized selection of over 100 Data Management Professionals, with offices located across the USA and Headquarters in New York City. Our team of experts has implemented solutions in a variety of industries, including Healthcare, Insurance, Manufacturing, Financial Services, Media, Pharmaceutical, Retail, and others. Intricity is uniquely positioned as a partner to the business that deeply understands what makes the data tick. This joint knowledge and acumen has positioned Intricity to beat out its Big 4 competitors time and time again. Intricity’s area of expertise spans the entirety of the information lifecycle. This means that when your problem involves data, Intricity will be a trusted partner. Intricity's services cover a broad range of data-to-information engineering needs:

What Makes Intricity Different?

While Intricity conducts highly intricate and complex data management projects, Intricity is first and foremost a Business User Centric consulting company. Our internal slogan is to Simplify Complexity. This means that we take complex data management challenges and not only make them understandable to the business but also make them easier to operate. Intricity does this by using tools and techniques that are familiar to business people but adapted for IT content.

Thought Leadership

Intricity authors a highly sought-after Data Management Video Series targeted towards Business Stakeholders at https://www.intricity.com/videos. These videos are used in universities across the world. Here is a small set of universities leveraging Intricity’s videos as a teaching tool:

Talk With a Specialist

If you would like to talk with an Intricity Specialist about your particular scenario, don’t hesitate to reach out to us. You can write us an email: specialist@intricity.com

(C) 2023 by Intricity, LLC

This content is the sole property of Intricity LLC. No reproduction can be made without Intricity's explicit consent.

Intricity, LLC. 244 Fifth Avenue Suite 2026 New York, NY 10001

Phone: 212.461.1100 • Fax: 212.461.1110 • Website: www.intricity.com