I can’t say WHEN this will happen, but some day data integration will be a completely automated process. Having been in the Data Management space for over 10 years, I feel a little embarrassed that we’re not even close to that dream. We already have autonomous cars that can improvise through the complexities of city traffic (http://en.wikipedia.org/wiki/Google_driverless_car), as well as autonomous planes, including recent ones that can land on aircraft carriers (http://www.popsci.com/technology/article/2012-07/i-am-warplane). And we’ve had these technologies for quite a while. Now turn your mind to the data integration space, and you’ll start to see why I’m a little embarrassed about our industry. Compared with landing on an aircraft carrier, ours is a remarkably stable environment, yet we can’t manage to deliver data integration without an army of consultants.

I’ve oversimplified this problem on purpose. There ARE some reasons our industry has been slow to automate the process. However, NONE of them are unmanageable:

1. You have to UNDERSTAND your source and your target to bring them together:

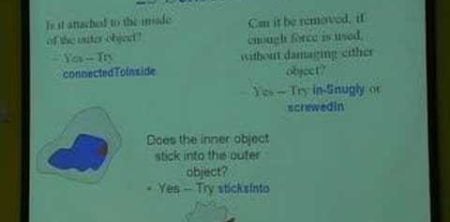

Movement of data, whether for Application Integration or Data Warehousing, requires knowledge of what the data is. Not just what its data type is, but what the data is FOR. For example, when we integrate data between applications, we need to understand the business processes behind the application databases so that our integration efforts do not disrupt those applications. That understanding then has to be translated into mapping logic that transforms the data from one location to another.

So this “future system” would need to truly understand business processes and data structures, then deduce its data integration logic from regular scans of the source and target systems.
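As a very small illustration of deducing integration logic from scans, the Python sketch below proposes source-to-target column mappings from scanned metadata alone. The schemas, the `propose_mappings` helper, and the 0.6 similarity threshold are all hypothetical, and matching on column-name similarity plus data-type compatibility is only a crude stand-in for the business-process understanding described above.

```python
from difflib import SequenceMatcher

# Hypothetical metadata from scanning a source and a target: column -> type.
SOURCE = {"cust_id": "int", "cust_name": "varchar", "created_dt": "date"}
TARGET = {"customer_id": "int", "customer_name": "varchar", "create_date": "date"}


def name_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two lower-cased column names."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def propose_mappings(source, target, threshold=0.6):
    """Greedily pair each source column with the most similar target column
    of the same type. Returns {source_col: (target_col, score)}; columns
    with no candidate above the threshold are left unmapped for a human."""
    mappings = {}
    used = set()
    for s_col, s_type in source.items():
        candidates = [
            (name_similarity(s_col, t_col), t_col)
            for t_col, t_type in target.items()
            if t_type == s_type and t_col not in used
        ]
        if not candidates:
            continue
        score, best = max(candidates)
        if score >= threshold:
            mappings[s_col] = (best, round(score, 2))
            used.add(best)
    return mappings


if __name__ == "__main__":
    for src, (tgt, score) in propose_mappings(SOURCE, TARGET).items():
        print(f"{src} -> {tgt} (similarity {score})")
```

Anything below the threshold is deliberately left unmapped, which is where the business knowledge (or a consultant) still has to step in.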

2. You have to KNOW things about the world to generate Business Intelligence-oriented data models:

Data Integration is useless if you can’t design it for human consumption through Business Intelligence. So I can’t talk about Data Integration without addressing the delivery of data to the business.

Humans KNOW things, both simple and complex, and this is fundamental to logically defining data models about our world. While it might sound outlandish to hear of a computer “knowing” things, the idea has actually been around for a long time. MANY Artificial Intelligence studies and commercial solutions exist today (http://en.wikipedia.org/wiki/Artificial_intelligence), and they have been in existence for quite some time. Back in 2006, the CEO of Cycorp presented on the topic, along with his organization’s solution, to a group at Google (http://www.youtube.com/watch?v=KTy601uiMcY). More recently, this “knowledge” about our world was put on display by IBM’s artificial intelligence system, Watson (http://www.watson.ibm.com/index.shtml).

The knowledge needed to generate data models does not need to be anywhere near as broad or deep as that of the IBM Watson project. In fact, as most data architects discover over multiple projects, the rules and goals of different businesses are mostly the same.

Therefore, this “future system” would need to model logical representations of data that Business Intelligence tools could leverage. Could it go further and simply deliver the information pertinent to the business’s needs? Sure. But let’s take this one step at a time.

3. You have to KNOW things about the world to generate Master Data Management Hubs:

Not only do we need to present data to the business, but we also need an elegant way of keeping that data consistent and synchronized. The same arguments made in #2 apply to generating the data models that govern survivorship among duplicate records. Engines for detecting duplicate records already exist on the market. However, the architecture to support those engines and act on their results takes a significant amount of time and resources to implement. This takes me to my next item…
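To make the survivorship idea concrete, here is a minimal Python sketch of the kind of logic that sits around a matching engine: grouping duplicates and building a “golden record” from each group. Exact matching on a normalized email address stands in for a real matching engine, and the survivorship rule (the most recently updated non-empty value wins) is just one common choice; the record layout and helper names are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical customer records arriving from two source systems.
RECORDS = [
    {"source": "crm", "name": "Jane Doe", "email": "jane@example.com",
     "phone": None, "updated": date(2012, 5, 9)},
    {"source": "erp", "name": "JANE DOE", "email": "Jane@Example.com ",
     "phone": "555-0100", "updated": date(2012, 3, 1)},
    {"source": "erp", "name": "John Roe", "email": "john@example.com",
     "phone": "555-0199", "updated": date(2011, 8, 2)},
]


def match_key(record):
    """Simplistic match rule: identical email after trimming and lower-casing."""
    return record["email"].strip().lower()


def survive(group, fields=("name", "email", "phone")):
    """Build a golden record: for each field, keep the most recently
    updated non-empty value found in the duplicate group."""
    newest_first = sorted(group, key=lambda r: r["updated"], reverse=True)
    golden = {f: next((r[f] for r in newest_first if r[f]), None) for f in fields}
    golden["sources"] = sorted(r["source"] for r in group)
    return golden


def build_golden_records(records):
    """Group records by match key, then apply survivorship to each group."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    return [survive(group) for group in groups.values()]


if __name__ == "__main__":
    for golden in build_golden_records(RECORDS):
        print(golden)
```

Here Jane’s golden record takes its name from the newer CRM row but falls back to the older ERP row for the phone number. Swapping in a different survivorship rule, such as always preferring a trusted source system, only requires changing `survive`.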

4. You have to USE best practices when architecting a solution:

There are many ways to skin a cat, as the saying goes. However, there is always a “best” way to do something, and the same is true of data architectures. For example, it might make sense to stage data before loading it into the target. This is where I believe the power of an automated system would really show itself. Because an automated system can test and retest without tiring out or costing the organization more money, it could arrive at a truly optimal architecture.

So this “future system” would need to know current best practices for moving data in different circumstances, then be able to derive an approach through automated or simulated testing.
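The “test and retest” idea can be sketched as a tiny benchmark harness. This Python example uses an in-memory SQLite database to compare two hypothetical load strategies, direct row-by-row inserts versus loading a staging table first, verifies that each run loaded every row, and then picks the faster one. The strategies, table names, and row counts are assumptions for illustration only; a real system would test against production-like volumes and many more configurations.

```python
import sqlite3
import time


def make_db():
    """Fresh in-memory database with hypothetical target and staging tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute("CREATE TABLE stage (id INTEGER, amount REAL)")
    return conn


def direct_load(conn, rows):
    """Candidate A: insert straight into the target, committing per row."""
    for row in rows:
        conn.execute("INSERT INTO target VALUES (?, ?)", row)
        conn.commit()


def staged_load(conn, rows):
    """Candidate B: bulk-load a staging table, then move rows set-wise."""
    with conn:  # a single transaction, committed when the block exits
        conn.executemany("INSERT INTO stage VALUES (?, ?)", rows)
        conn.execute("INSERT INTO target SELECT id, amount FROM stage")
        conn.execute("DELETE FROM stage")


def benchmark(strategies, rows, trials=3):
    """Run each strategy on fresh databases; return its best time in seconds.
    Raises if any run fails to load every row, so speed never beats correctness."""
    results = {}
    for name, load in strategies.items():
        timings = []
        for _ in range(trials):
            conn = make_db()
            start = time.perf_counter()
            load(conn, rows)
            timings.append(time.perf_counter() - start)
            count = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
            conn.close()
            if count != len(rows):
                raise RuntimeError(f"{name} loaded {count} of {len(rows)} rows")
        results[name] = min(timings)
    return results


if __name__ == "__main__":
    rows = [(i, i * 1.5) for i in range(5_000)]
    timings = benchmark({"direct": direct_load, "staged": staged_load}, rows)
    print(timings, "-> choose", min(timings, key=timings.get))
```

Timings depend on the machine, which is the point: the harness measures the environment it runs in rather than relying on a rule of thumb.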

I know I’m just scratching the surface here. However, the industry needs to start taking baby steps in this direction. Data volumes are exploding, and businesses need far more flexibility to acquire and change.

What would this system look like?

What I envision is a system that can literally be purchased as a hardware device, plugged in, configured for its environment, and unleashed. Its focus would be intersystem data integration (where necessary), data warehousing, and master data management. The system would sample, test, and retest architecture configurations, with full database control over staging, warehousing, and master data. It would also provide override controls so the business could intervene in the decision process, and it would evaluate each override for future use.

Sounds crazy? Ya, just like a plane landing by itself on an aircraft carrier. And yes, it would take a fortune to design, but I believe that we already have the tools to do it.

Jared Hillam, EIM Practice Director, Intricity LLC